In my previous post I discussed how to join an Ubuntu 12.04 server to a Windows AD domain (so far I've tried it with both Windows Server 2008 R2 and Windows 8 Server Beta AD domains).

In this post I discuss how to get Kerberos-based single sign-on (SSO) working. I have tested this method with an OpenSSH client from Linux and with PuTTY from Windows.

- Install auth-client-config and the Kerberos libraries for PAM:

sudo apt-get install auth-client-config libpam-krb5

- Configure PAM for Kerberos authentication:

sudo auth-client-config -t pam-password -p kerberos_example
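To confirm that the PAM change took effect, you can list which of the common PAM files now mention the Kerberos module. This is only a sketch: `pam_krb5_files` is a hypothetical helper name, and it assumes the module shows up as `pam_krb5.so` in the `common-*` files under `/etc/pam.d`, which is the usual Debian/Ubuntu layout.

```shell
#!/bin/sh
# Hypothetical helper: print the common-* PAM files that reference pam_krb5.so.
# The directory argument is optional and defaults to /etc/pam.d (assumed layout).
pam_krb5_files() {
    dir="${1:-/etc/pam.d}"
    # -l prints only the names of matching files; errors (e.g. no such
    # files) are silenced so an empty result simply means "not configured".
    grep -l 'pam_krb5\.so' "$dir"/common-* 2>/dev/null
}
```

Running `pam_krb5_files` with no argument checks the live configuration; an empty result suggests the profile was not applied.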

It is very important that name resolution works correctly: Kerberos identifies services by host name, so lookups that fail or return stale results will break authentication. An up-to-date DNS server is therefore crucial.
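A quick way to sanity-check name resolution is to resolve the server's name to an address and then resolve that address back to a name. The sketch below uses `getent hosts`, which follows the system's normal resolver order; `check_dns` is a hypothetical helper name, not something shipped with any package.

```shell
#!/bin/sh
# Hypothetical helper: forward lookup of a name, then reverse lookup of
# the first address returned. Prints "name -> address -> name" on success.
check_dns() {
    name="$1"
    # First column of getent output is the address
    addr=$(getent hosts "$name" | awk '{print $1; exit}')
    if [ -z "$addr" ]; then
        echo "forward lookup failed for $name"
        return 1
    fi
    # Second column of getent output is the canonical name
    rname=$(getent hosts "$addr" | awk '{print $2; exit}')
    if [ -z "$rname" ]; then
        echo "reverse lookup failed for $addr"
        return 1
    fi
    echo "$name -> $addr -> $rname"
}
```

On the server you could call it as `check_dns "$(hostname -f)"`; on a client, pass the server name you intend to connect to. If the name that comes back differs from the one you started with, that is worth investigating before going further.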

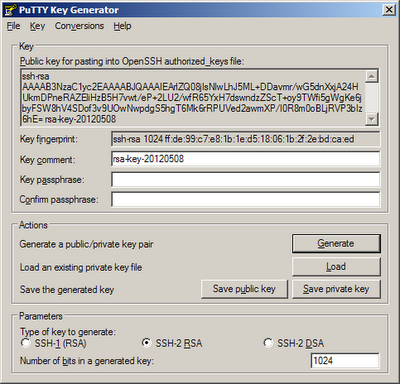

In order to test SSO from Putty, you need to ensure that you don't connect via IP address and that you set the auto-login username to a valid domain user, see below:

In order to test SSO from another Linux box, follow these steps (note that depending on your distro you might have to install some packages):

- Get Kerberos ticket:

kinit domainuser@TEST.ORG
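Before trying to log in, it can help to confirm that a valid ticket is actually in the cache. The sketch below relies on `klist -s`, which runs silently and reports through its exit status whether the credentials cache is readable and unexpired (MIT Kerberos behaviour, assumed here). `ensure_ticket` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: run kinit only when the cache holds no valid ticket.
ensure_ticket() {
    principal="$1"
    # klist -s prints nothing; exit status 0 means a usable ticket cache
    if klist -s; then
        echo "valid ticket cache found"
    else
        echo "no valid ticket, running kinit for $principal"
        kinit "$principal"
    fi
}
```

For example, `ensure_ticket domainuser@TEST.ORG` prompts for a password only when it has to.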

- Login:

ssh servername -l 'test\domainuser'
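A plain `ssh` login can silently fall back to a password prompt, which makes it hard to tell whether SSO really worked. One way to rule that out, sketched below, is to restrict the client to GSSAPI and disable interactive prompts. The options used are standard OpenSSH client options; `sso_login` is a hypothetical helper name, and the sketch assumes the server accepts GSSAPI authentication.

```shell
#!/bin/sh
# Hypothetical helper: attempt a login that can only succeed via Kerberos.
sso_login() {
    host="$1"
    user="$2"
    # PreferredAuthentications limits the client to GSSAPI only;
    # BatchMode=yes disables password prompts, so a failure is reported
    # instead of falling back to interactive authentication.
    # The remote command "true" just proves the login and exits.
    ssh -o PreferredAuthentications=gssapi-with-mic \
        -o GSSAPIAuthentication=yes \
        -o BatchMode=yes \
        -l "$user" "$host" true
}
```

With a valid ticket in the cache, `sso_login servername 'test\domainuser'` should return without prompting; a non-zero exit status means the Kerberos login itself failed.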

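If SSO doesn't work, you can troubleshoot by starting a second sshd instance in debug mode on a spare port:

sudo /usr/sbin/sshd -p 31415 -d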

You'll need to allow traffic on port 31415 or the port you choose, which you can easily do by stopping iptables. Clearly this should only be done on servers that are not internet facing. If the server is internet facing then just open port 31415, e.g.:

sudo iptables -I INPUT -p tcp --dport 31415 -j ACCEPT

You can connect to this server with:

ssh servername -p 31415 -v

This should tell you what the problem is, e.g.:

debug1: Unspecified GSS failure. Minor code may provide more information
Key table entry not found

This was actually caused by a name resolution problem.
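When you hit "Key table entry not found", one thing worth checking on the server is whether the keytab holds a host entry for the name the client is actually using. The sketch below greps the output of `klist -k` for a `host/<fqdn>@` principal. It assumes MIT Kerberos `klist` and the default keytab path `/etc/krb5.keytab`; `keytab_has_host` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: succeed if the keytab lists host/<fqdn>@<any realm>.
# Reading the keytab normally requires root.
keytab_has_host() {
    fqdn="$1"
    keytab="${2:-/etc/krb5.keytab}"
    # -F matches the string literally (dots are not wildcards),
    # -i ignores case, -q suppresses output and reports via exit status.
    klist -k "$keytab" | grep -qiF "host/$fqdn@"
}
```

For example, run as root: `keytab_has_host "$(hostname -f)" && echo "host principal present"`. If the name the client resolves does not match any entry, fixing DNS is the place to start.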