This is an attempt to write an article about producing more accurate estimates. Here's to you, ghostfacedcoder:

These articles always frustrate me, because they clearly come from people in (comparatively) bad companies who think idiotic things about estimates, and as a result the authors need to write arguments against that idiotic thinking.

But personally I've escaped those bad companies, and I could care less about reading more whining about estimates (NOT blaming OP: again people write about what's relevant to them ... it's just that that isn't relevant to me).

I'd like to see more articles about getting estimates more accurate, or organizing projects better around inaccurate estimates. You know, the stuff that's a lot harder to do than complain (although, again, I'm not faulting anyone for complaining, just saying what I'd like to read). But in my experience such articles are far more rare, and I guess that says something about the state of our industry.

Project Management Triangle

In project management, and I'm not just referring to IT projects, there is a well-known concept called the project management triangle, aka the Scope Triangle, aka the Triple Constraint. I was introduced to it at the local cobbler's, who had a humorous sign on the wall which read as follows (rough translation from the original):

A quick job well done won't be cheap

A cheap job well done won't be quick

A cheap job quickly done won't be good.

Producing an estimate is no different and thus the quality of the estimate depends on the amount of resources spent on it.

It is true that there will always be spanners in the works, but this intuitively makes sense. After all, if the project manager asks a developer for an estimate and hovers around waiting for it, the developer has little or no time to think about the issues that might arise from an off-the-top-of-the-head design for the feature. The likely result is missed issues, or an estimate for an approach that will not actually work.

So how can we do better? The answer is to spend more resources (time and/or people) on coming up with the estimates.

Caveat emptor

Firstly, I will put my hand up and say that the method I am proposing is entirely theoretical: the three times I used it to come up with an estimate, the feature ended up never being developed, so real-world data is still needed.

Secondly, coming up with the estimate took around 20% of the estimated development time, although, as discussed later, some of this time would be saved in the development phase. This was for features that were estimated at around one person-week.

Thirdly, for any feature of reasonable complexity it simply isn't possible to be 100% accurate with an estimate all the time. Not even doing the feature and then re-doing it will give you a 100% accurate estimate every time, as on the second attempt you'd probably apply what you learnt the first time around and do it differently, which would likely lead to a different duration.

Fourthly, I realise that the resource expenditure might not always warrant the increase in accuracy, but I think it can be useful in situations where there is a high risk to the business or where trust needs to be repaired, to give but two examples.

Finally, I think that the more familiar the team becomes with a codebase and the domain, the less time consuming this method will be.

There are various sources that contribute to uncertainty in an estimate, but they can all be distilled down to one thing: assumptions. What this method does is minimise the assumptions by spending time doing some coding.

This is not doing the work to then tell the PM how long it will take to do (having already done the work) but it is doing some coding to ensure that the assumptions that we make in our estimate are reasonable.

While I think that this methodology works for estimating a feature to a great degree of accuracy, it would not work very well for a bid. The upfront cost is so high that I would be surprised if anybody went along with it.

There is Method in the Madness

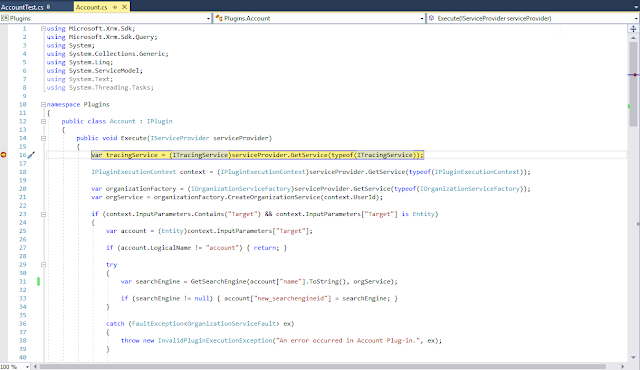

- Create a feature branch.

  The idea is to have a separate branch to enable pseudo development.

- Add failing unit tests.

  If you don't use TDD you can ignore this step.

- Add Interfaces/Classes with empty methods that have the right signatures (models included) so that your project compiles successfully.

  The whole idea is predicated on this step, as it forces the developer to think about the design of the feature.

  It is very important that the data models are added here, as getting the data needed might be half the battle.

- Estimate how long it will take to write each method, writing down each and every assumption you make as a comment on each method and/or class/interface.

  Make sure that your actual time estimate for each method is not written down in the code, to avoid any possible anchoring effect.

- Submit a PR to enable somebody to validate your assumptions.

  This step is not necessary, but it's another gate in the process to increase accuracy, as a second pair of eyes might see different things, e.g. missing methods, unnecessary methods, an entirely wrong approach, etc.

- The PR reviewer validates the assumptions and also estimates the development time.

  An average, or even a weighted average, is taken of both estimates and this then becomes the team's official estimate. Let the PM have the bad news.
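Combining the two estimates is simple arithmetic. As a rough sketch (the function name `combine_estimates` and the `author_weight` parameter are my own, and how to pick the weight is left open here), it could look like this:

```python
def combine_estimates(
    author_days: float,
    reviewer_days: float,
    author_weight: float = 0.5,
) -> float:
    """Weighted average of the author's and the reviewer's estimates.

    author_weight is the share given to the author's estimate; the
    reviewer gets the remainder. The default of 0.5 is a plain average.
    """
    if not 0.0 <= author_weight <= 1.0:
        raise ValueError("author_weight must be between 0 and 1")
    return author_weight * author_days + (1.0 - author_weight) * reviewer_days


# Plain average of 5 and 7 days.
print(combine_estimates(5.0, 7.0))  # 6.0

# Trusting the reviewer three times as much as the author.
print(combine_estimates(5.0, 7.0, author_weight=0.25))  # 6.5
```

A team might, for instance, weight the estimate of whoever knows that part of the codebase better more heavily, but that is a judgment call rather than part of the method.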